Built for decisions that count.

Download our latest Responsible AI report to learn how we've built transparency, fairness, and security into our systems.

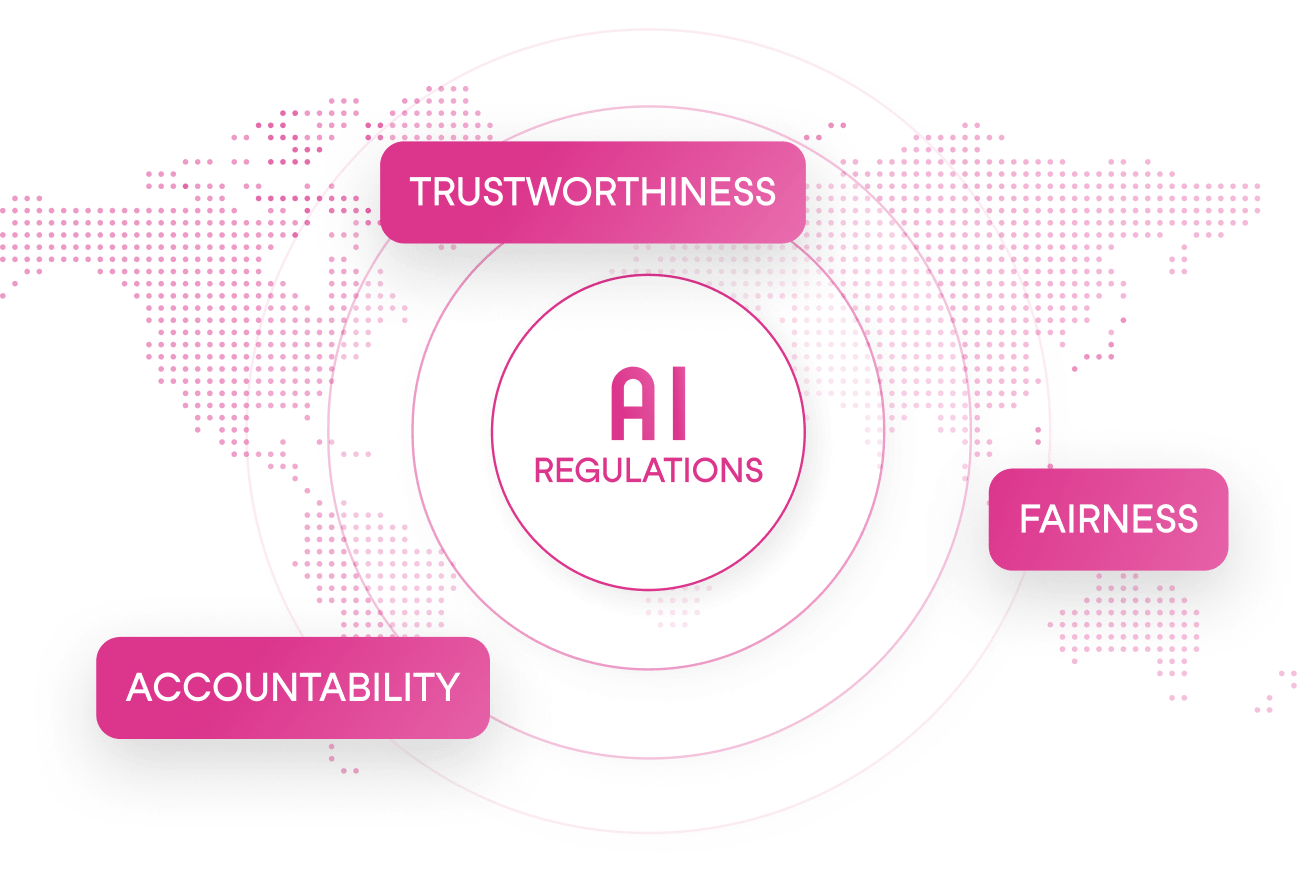

Understanding the regulatory landscape

Download this positioning paper to learn what's happening in insurance AI regulation and which core themes recur across jurisdictions.

What's covered:

The key AI regulations taking effect by 2026

Trust, accountability, and fairness as the universal principles behind AI regulations

How Sixfold applies these principles across its underwriting AI

Additional Resources

Responsible AI Report 2024

Sixfold's 2024 framework for building transparent, compliant AI in underwriting. See how our approach has developed.


Sixfold's Trust Center

Real-time visibility into how Sixfold protects data integrity, security, and compliance across our platform and practices.

![Text on pink background reading: '[Webinar] How to Secure Your AI Compliance Team’s Approval'.](https://cdn.prod.website-files.com/645ba9e7460d485c17387d8c/69141504e4525cc2dabe5c54_Image%20(2).avif)

Compliance Webinar

Colorado's Deputy Commissioner for P&C Insurance, a legal expert, and Sixfold's CEO discuss how to implement AI safely in insurance.