Sixfold's Approach to AI Fairness & Bias Testing

As AI becomes more embedded in the insurance underwriting process, carriers, vendors, and regulators share a growing responsibility to ensure these systems remain fair and unbiased.

At Sixfold, our dedication to building responsible AI means regularly exploring new and thoughtful ways to evaluate fairness.[1]

We sat down with Elly Millican, Responsible AI & Regulatory Research Expert, and Noah Grosshandler, Product Lead on Sixfold's Life & Health team, to discuss how Sixfold is approaching fairness testing in a new way.

Fairness As AI Systems Advance

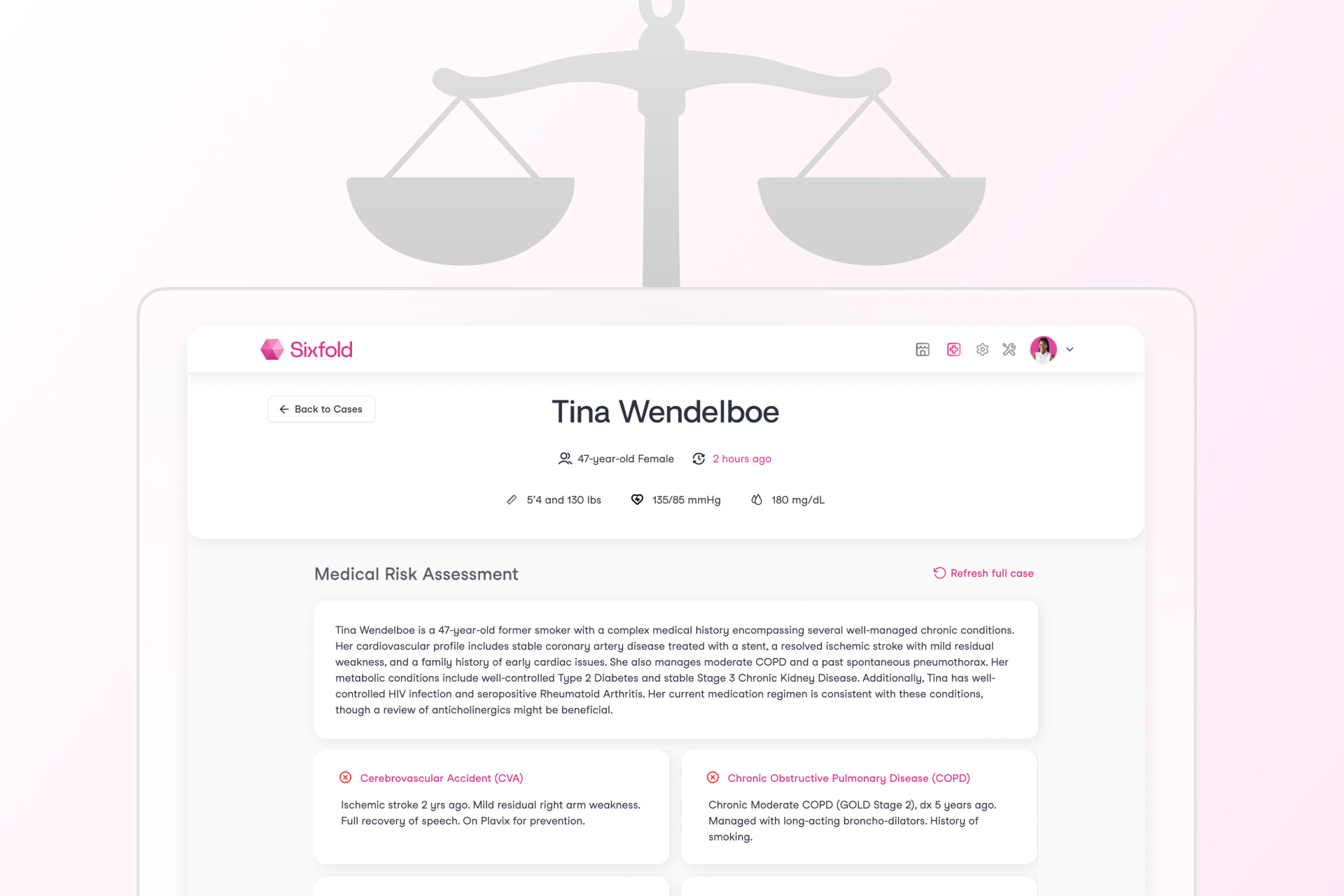

Fairness in insurance underwriting isn’t a new concern, but testing for it in AI systems that don’t make binary decisions is.

At Sixfold, our Underwriting AI for life and health insurers doesn’t approve or deny applicants. Instead, it analyzes complex medical records and surfaces relevant information based on each insurer's unique risk appetite. This allows underwriters to work much more efficiently and focus their time on risk assessment, not document review.

While that’s a win for underwriters, it complicates fairness testing. When your AI produces qualitative outputs such as facts and summaries, rather than scores and decisions, most traditional fairness metrics won’t work. Testing for fairness in this context requires an alternative approach.

“The academic work around fairness testing is very focused on traditional predictive models, however Sixfold is doing document analysis,” explains Millican. “We needed to develop new methodologies for fairness testing that reflect how Sixfold works.”

“Even selecting which facts to pull and highlight from medical records in the first place comes with the opportunity to introduce bias. We believe it’s our responsibility to test for and mitigate that,” Grosshandler adds.

While regulations prohibit discrimination in underwriting, they rarely spell out how to measure fairness in systems like Sixfold’s. That ambiguity has opened the door for innovation, and for Sixfold to take the initiative in shaping best practices and contributing to the regulatory conversation.

A New Testing Methodology

To address the challenge of fairness testing in a system with no binary outcomes, Sixfold is developing a methodology rooted in counterfactual fairness testing. The idea is simple: hold everything constant except for a single demographic attribute and see if and how the AI’s output changes.[2]

“We start with an ‘anchor’ case and create a ‘counterfactual twin’ who is identical in every way except for one detail, like race or gender. Then we run both through our pipeline to see if the medical information that’s presented in Sixfold varies in a notable or concerning way,” Millican explains.

“Ultimately we want to validate that medically similar cases are treated the same when their demographic attributes differ,” Grosshandler states.
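As a rough illustration of the anchor-and-twin idea, the sketch below builds a counterfactual twin by changing exactly one demographic attribute on a copy of the anchor case. It is not Sixfold's implementation: the case layout, the function names `make_twin` and `is_eligible`, and the keyword list are all assumptions made for this example. The eligibility check is a simplified stand-in for the filter described in footnote 2, which excludes cases where the flipped attribute is itself medically relevant.

```python
from copy import deepcopy

# Hypothetical keyword list for sex-specific conditions; illustrative only.
SEX_SPECIFIC_TERMS = {"pregnancy", "prostate", "ovarian"}


def make_twin(anchor: dict, attribute: str, new_value: str) -> dict:
    """Return a copy of the anchor with exactly one demographic attribute changed."""
    if anchor["demographics"].get(attribute) == new_value:
        raise ValueError("twin must differ from the anchor on the chosen attribute")
    twin = deepcopy(anchor)  # deep copy so the anchor is never mutated
    twin["demographics"][attribute] = new_value
    return twin


def is_eligible(case: dict, attribute: str) -> bool:
    """Exclude cases where flipping the attribute would change the medical picture."""
    if attribute != "gender":
        return True
    text = " ".join(case["conditions"]).lower()
    return not any(term in text for term in SEX_SPECIFIC_TERMS)


# Made-up anchor case, not real applicant data.
anchor = {
    "demographics": {"gender": "female", "race": "white"},
    "conditions": ["type 2 diabetes", "hypertension"],
}

if is_eligible(anchor, "gender"):
    twin = make_twin(anchor, "gender", "male")
```

Both the anchor and the twin would then be run through the same pipeline, so that any difference in output can be traced to the one attribute that changed.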

Proof-of-Concept

For the initial proof-of-concept, the team is focused on two key dimensions of Sixfold’s Life & Health pipeline.

1. Fact Extraction Consistency

Does Sixfold extract the same facts from medically identical underwriting case records that differ only in one protected attribute?

2. Summary Framing and Content Consistency

Does Sixfold produce diagnosis summaries with equivalent clinical content and emphasis for medically identical underwriting cases?

“It’s not just about missing or added facts, sometimes it’s a shift in tone or emphasis that could change how a case is perceived,” Millican explains. “We want to be sure that if demographic details are influencing outputs, it’s only when clinically appropriate. Otherwise, we risk surfacing irrelevant information that could skew decisions.”
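The first dimension, fact extraction consistency, lends itself to a simple set comparison. The sketch below is one possible way to do it, assuming extracted facts arrive as plain strings; `normalize` and `compare_facts` are hypothetical helpers, and the sample facts are made up. It reports facts that appear for only one of the two cases, plus a Jaccard overlap score.

```python
def normalize(fact: str) -> str:
    """Lowercase and collapse whitespace so trivial formatting differences are ignored."""
    return " ".join(fact.lower().split())


def compare_facts(anchor_facts: list[str], twin_facts: list[str]) -> dict:
    """Report facts seen for only one case and the Jaccard overlap of the two sets."""
    a = {normalize(f) for f in anchor_facts}
    t = {normalize(f) for f in twin_facts}
    union = a | t
    return {
        "only_in_anchor": sorted(a - t),
        "only_in_twin": sorted(t - a),
        # Two empty fact sets are treated as fully consistent.
        "jaccard": len(a & t) / len(union) if union else 1.0,
    }


# Made-up extraction results for an anchor case and its twin.
report = compare_facts(
    ["Type 2 diabetes, diagnosed 2019", "HbA1c 7.2%", "Metformin 500 mg"],
    ["type 2 diabetes, diagnosed 2019", "HbA1c 7.2%"],
)
```

Here the medication fact appears only for the anchor, which is the kind of discrepancy a reviewer would want to inspect. A set comparison like this does not capture the second dimension, shifts in tone or emphasis within summaries, which would need a separate, more qualitative review.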

Expanding the Scope

While the team’s current focus is on foundational fairness markers (race and gender), the methodology is designed to evolve. Future testing will likely explore proxy variables such as ZIP codes, names, and socioeconomic indicators, which might implicitly shape model behavior.

“We want to get into cases where the demographic signal isn’t explicit, but the model might still infer something. Names, locations, insurance types, all of these could serve as proxies that unintentionally influence outcomes,” Millican elaborates.
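The same one-change-at-a-time idea could extend to proxy variables. The sketch below is a hypothetical illustration only: the field names, placeholder values, and the `proxy_twins` helper are assumptions, and a real test set would need carefully curated values. It generates one twin per proxy value, changing a single field each time.

```python
from copy import deepcopy

# Hypothetical proxy fields with placeholder values.
PROXY_VALUES = {
    "zip_code": ["zip-A", "zip-B"],
    "insurance_type": ["employer", "medicaid"],
}


def proxy_twins(anchor: dict, proxies: dict[str, list[str]]) -> list[dict]:
    """Build one twin per proxy value, changing a single field at a time."""
    twins = []
    for field, values in proxies.items():
        for value in values:
            if anchor.get(field) == value:
                continue  # identical to the anchor, nothing to compare
            twin = deepcopy(anchor)
            twin[field] = value
            twins.append(twin)
    return twins


# Made-up anchor case.
anchor = {
    "name": "Applicant A",
    "zip_code": "zip-A",
    "insurance_type": "employer",
    "conditions": ["asthma"],
}
twins = proxy_twins(anchor, PROXY_VALUES)
```

Changing one field per twin keeps any output difference attributable to a single proxy, at the cost of not testing combinations of proxies together.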

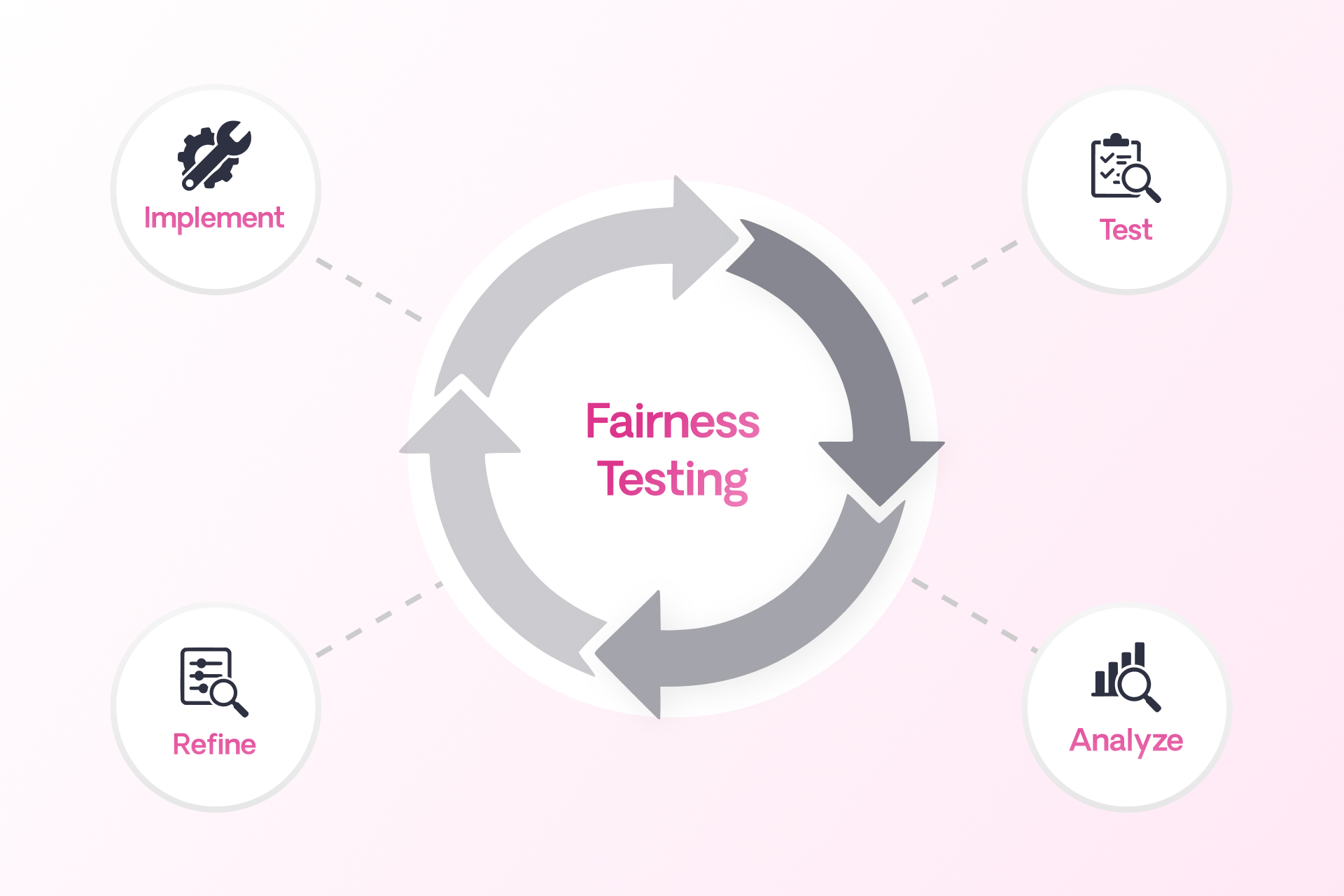

The team is also thinking ahead to version control for prompts and model updates, ensuring fairness testing keeps pace with an evolving AI stack.
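One way to tie fairness results to a specific prompt and model version is to fingerprint the pair and re-run the tests whenever the fingerprint changes. This is a minimal sketch under that assumption, not a description of Sixfold's tooling; `stack_fingerprint`, `needs_retest`, and the prompt and model strings are hypothetical.

```python
import hashlib
import json


def stack_fingerprint(prompt_text: str, model_id: str) -> str:
    """Derive a short, stable identifier for a prompt and model combination."""
    payload = json.dumps({"prompt": prompt_text, "model": model_id}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()[:12]


def needs_retest(fingerprint: str, tested: set[str]) -> bool:
    """A combination needs fairness testing if it has no recorded test run."""
    return fingerprint not in tested


# Fingerprints of combinations that already have fairness results on record.
tested = {stack_fingerprint("Summarize the diagnosis.", "model-v1")}

# Editing the prompt produces a new fingerprint, which flags a re-test.
changed = stack_fingerprint("Summarize the diagnosis briefly.", "model-v1")
```

With this setup, `needs_retest(changed, tested)` is true for the edited prompt, while the original prompt and model pair is recognized as already covered.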

“We’re trying to define what fairness means for a new kind of AI system,” explains Millican. “One that doesn’t give a single output, but shapes what people see, read, and decide.”

Sixfold isn’t just testing for fairness in isolation; it’s aiming to contribute to a broader conversation on how LLMs should be evaluated in high-stakes contexts like insurance, healthcare, finance, and more.

That’s why Sixfold is proactively bringing this work to the attention of regulatory bodies. By doing so, we hope to support ongoing standards development in the industry and help others build responsible and transparent AI systems.

“This work isn’t just about evaluating Sixfold, it’s about setting new standards for a new category of AI. Regulators are still figuring this out, so we’re taking the opportunity to contribute to the conversation and help shape how fairness is monitored in systems like ours,” Grosshandler concludes.

Positive Regulatory Feedback

When we recently walked through our testing methodology and results with a group of regulators focused on AI and data, the feedback was both thoughtful and encouraging. They didn’t shy away from the complexity, but they clearly saw the value in what we’re doing.

“The fact that it’s hard shouldn’t be a reason not to try. What you’re doing makes sense... You’re scrutinizing something that matters,” said one senior policy advisor.

One of the key themes that came up during the meeting was the unique nature of generative AI, and why it demands a different kind of oversight. As one senior actuary and behavioral data scientist put it: “Large language models are more qualitative than quantitative... A lot of technical folks don’t really get qualitative. They’re used to numbers. The more you can explain how you test the language for accuracy, the more attention it will get.”

That comment really resonated. It reflects the heart of our approach: we’re not just tracking metrics. We’re evaluating how language evolves, how facts can shift, and how risk is framed and communicated depending on the inputs.

The Road Ahead

Fairness in AI isn’t a fixed destination; it’s an ongoing commitment. Sixfold’s work in developing and refining fairness and bias testing methodologies reflects that mindset.

As more organizations turn to LLMs to analyze and interpret sensitive information, the need for thoughtful, domain-specific fairness methods will only grow. At Sixfold, we’re proud to be at the forefront of that work.

Footnotes

[1] While internal reviews have not surfaced evidence of systemic bias, Sixfold is committed to continuous testing and transparency to ensure that remains the case as we expand and refine our AI systems.

[2] To ensure accuracy, cases involving medically relevant demographic traits, like pregnancy in a gender-flipped case, are filtered out. The methodology is designed to isolate unfair influence, not obscure legitimate medical distinctions.